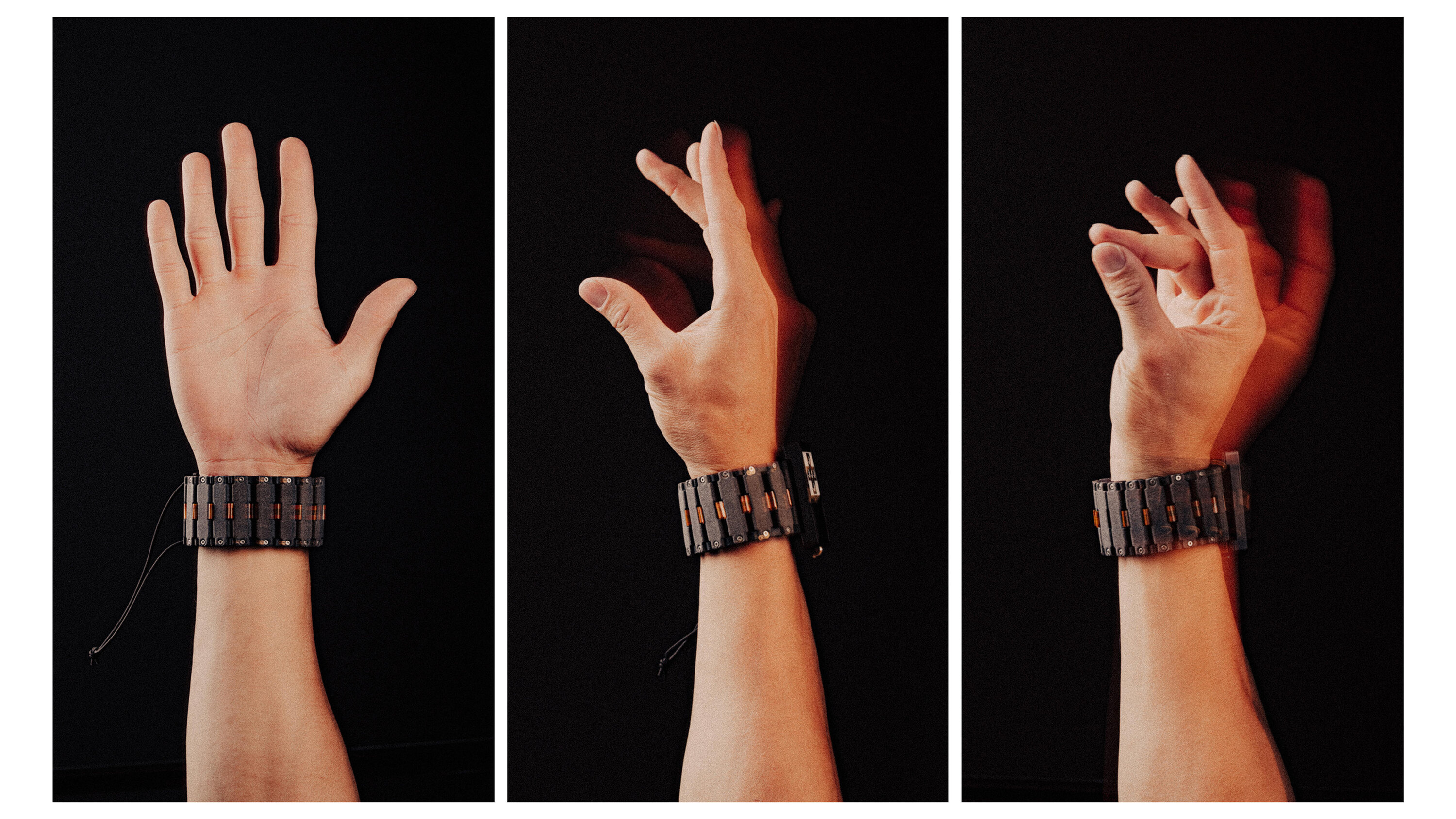

Meta’s Reality Labs has unveiled a wristband that uses surface electromyography (sEMG) to pick up the faint electrical signals produced by muscles at the wrist. This lets users control computers with simple gestures such as swipes, taps, and pinches, without touching a keyboard or mouse.

The device can also recognize handwritten characters, send messages, launch apps, and navigate menus by interpreting the motor nerve signals that reach the muscles, in some cases before any visible movement occurs. It was tested with Meta’s Orion AR glasses prototype but could also work with laptops, tablets, and smartphones.

Importantly, this non-invasive tool is also designed to improve digital access for people with motor impairments. Researchers at Carnegie Mellon University are testing it with people who have spinal cord injuries, because the band can read residual muscle signals even when the fingers are paralyzed.

The wristband points to a major shift in human-computer interaction. By turning muscle activity into digital commands, it offers a new, intuitive way to interact with technology that needs little or no visible movement and no touchscreen. Meta says it plans to launch the device alongside its next smart glasses by late 2025.

The innovation could benefit all users: it enables discreet messaging in public and hands-free use, and it broadens accessibility without requiring surgery, as brain implants do. It marks a significant step toward natural, neural-controlled computing.

Tags:

Post a comment

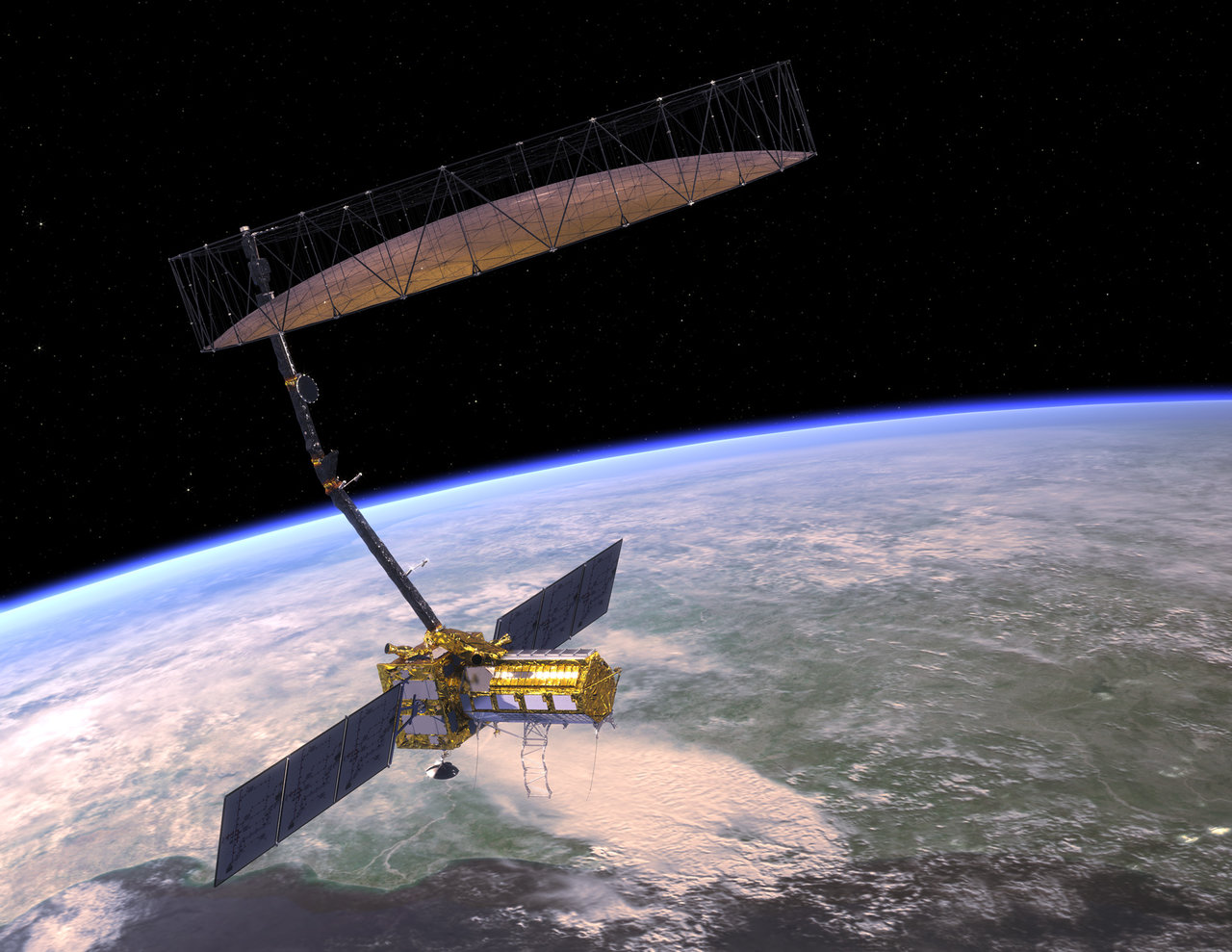

Why is ISRO building a new rocket launch pad?

- 29 Aug, 2025

- 2

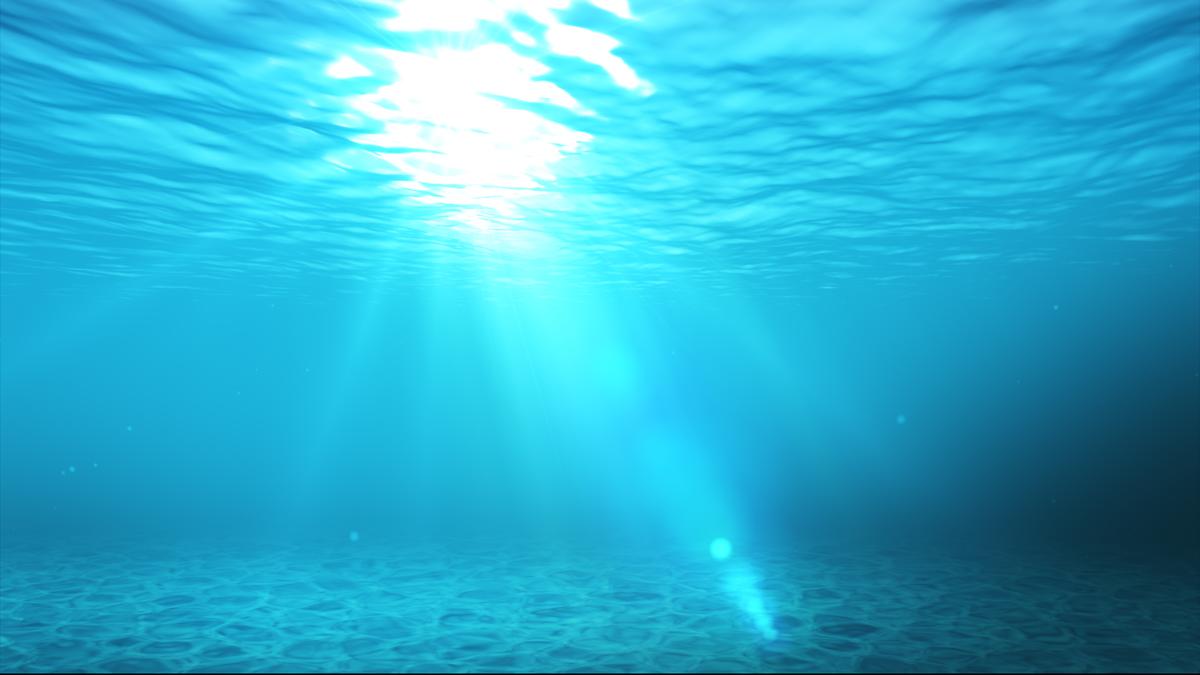

Can deep ocean water really cool the world’s data centres?

- 27 Aug, 2025

- 2

Can U.S. Robot Cargo Planes change the balance in Indo-Pacific?

- 28 Aug, 2025

- 2

Killer specs, Killer price’: Redmi targets budget buyers!

- 29 Jul, 2025

- 2

Hydrogen vs Battery: Which cuts cost for clean buses?

- 30 Jul, 2025

- 2

Categories

Recent News

Daily Newsletter

Get all the top stories from Blogs to keep track.